Traefik Ingress

What is it?

From the official website:

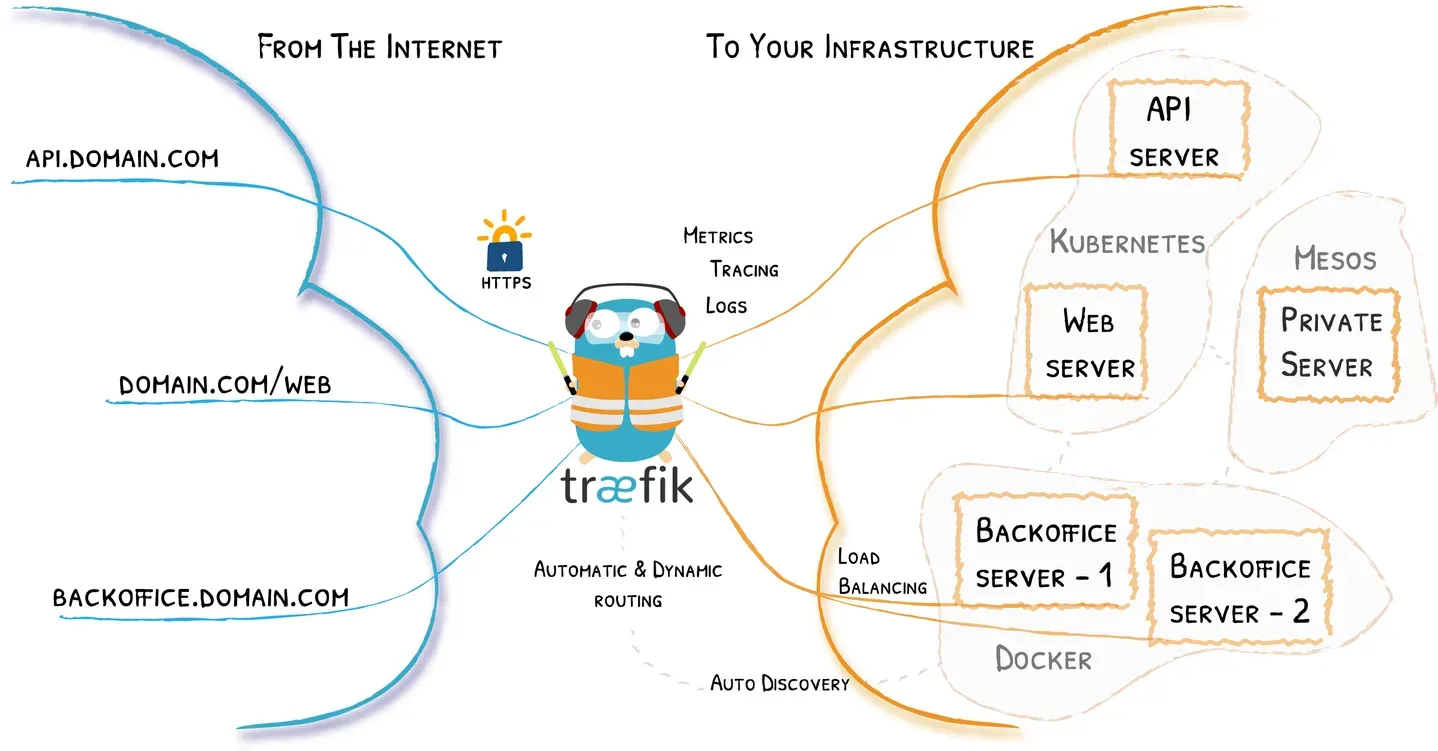

Traefik is an open-source Edge Router that makes publishing your services a fun and easy experience. It receives requests on behalf of your system and finds out which components are responsible for handling them.

It's basically a somewhat more advanced proxy server for your Kubernetes cluster: it takes domain names and routes them to the correct containers. The image that I shamelessly borrowed from their documentation explains it well.

Inside my home cluster, I prefer MetalLB to assign external IPs to my services, mainly because I don't have to deal with DNS. Traefik, on the other hand, requires DNS to be configured. If you installed K3s the same way I did, you should already have Traefik configured and with an assigned IP; in my case it's 192.168.0.200.

You can check that with:

root@control01:~# kubectl get svc -n kube-system | grep traefik
traefik LoadBalancer 10.43.159.145 192.168.0.200 80:31225/TCP,443:32132/TCP 36d

There is a very high chance you do not run your own manageable DNS server in your network, although you might. If you do, set it up to route the domain cube.local to the IP of the Traefik service. Again, in my case, it's 192.168.0.200. I'm not going to go over how to set up a custom DNS server at home, but if you really, really need one, maybe look at Technitium. I have it in a container and use it from time to time.

But in this example, I'm going to use the hosts file instead.

- Windows: C:\Windows\System32\drivers\etc\hosts
- Linux: /etc/hosts

They work the same way: the OS usually checks this file first for name resolution, and only then queries a DNS server to get the IP for a domain name.

I'm going to add this line at the end of the file:

192.168.0.200 cube.local

Now, when you type http://cube.local in your browser, it will resolve to our Traefik Ingress. We are basically simulating a DNS entry.
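If you would rather script the hosts file change than edit it by hand, here is a minimal sketch. The function name `add_hosts_entry` and its argument order are my own invention, not part of any tool; it only appends the line when the first hostname is not already mapped, so running it twice does no harm.

```shell
# add_hosts_entry FILE IP NAME [NAME...]
# Appends "IP NAME..." to FILE, unless the first NAME is already mapped there.
# (Hypothetical helper; the name and argument order are assumptions.)
add_hosts_entry() {
  hosts_file=$1
  ip=$2
  shift 2
  # Look for NAME as an exact hostname field on any non-comment line.
  if awk -v name="$1" '
       /^[[:space:]]*#/ { next }
       { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }
     ' "$hosts_file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$*" >> "$hosts_file"
}

# Example (the real file needs root):
#   add_hosts_entry /etc/hosts 192.168.0.200 cube.local
#   getent hosts cube.local    # on Linux, asks the system resolver
```

Extra hostnames can be passed after the first one and end up on the same line.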

Now we can create Ingress resources in Traefik for paths such as cube.local/ui and cube.local/grafana, and route them to their appropriate containers.

If you prefer subdomains such as ui.cube.local and grafana.cube.local, you need to add them to the 192.168.0.200 cube.local line, for example 192.168.0.200 cube.local ui.cube.local grafana.cube.local, to make that work. On a normal DNS server you would just use * for that A record, and you are done.

An Ingress points at a ClusterIP service: we target the service by its name and port. Importantly, we have to create the Ingress resource in the same namespace as the service we want to expose, or Traefik will complain that it can't find the service.

How to use Traefik

Let's look at what services I already have in K3s. No need to reinvent the wheel when we can use something we already have running.

root@control01:~# kubectl get svc --all-namespaces
NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 37d
kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 37d
kube-system metrics-server ClusterIP 10.43.254.144 <none> 443/TCP 37d
kube-system traefik LoadBalancer 10.43.159.145 192.168.0.200 80:31225/TCP,443:32132/TCP 37d
openfaas basic-auth-plugin ClusterIP 10.43.6.51 <none> 8080/TCP 36d
openfaas prometheus ClusterIP 10.43.98.240 <none> 9090/TCP 36d
openfaas nats ClusterIP 10.43.113.234 <none> 4222/TCP 36d
openfaas alertmanager ClusterIP 10.43.19.176 <none> 9093/TCP 36d
openfaas gateway ClusterIP 10.43.139.78 <none> 8080/TCP 36d
openfaas gateway-external NodePort 10.43.142.141 <none> 8080:31112/TCP 36d
openfaas openfaas-service LoadBalancer 10.43.2.41 192.168.0.203 8080:31682/TCP 36d
openfaas-fn cows ClusterIP 10.43.173.223 <none> 8080/TCP 36d
openfaas-fn mailme ClusterIP 10.43.86.175 <none> 8080/TCP 36d
kube-system kubelet ClusterIP None <none> 10250/TCP,10255/TCP,4194/TCP 35d
openfaas-fn text-to-speach ClusterIP 10.43.2.24 <none> 8080/TCP 28d
logging loki-stack-headless ClusterIP None <none> 3100/TCP 22d
logging loki-stack ClusterIP 10.43.16.221 <none> 3100/TCP 22d
argocd argocd-applicationset-controller ClusterIP 10.43.7.236 <none> 7000/TCP,8080/TCP 10d
argocd argocd-dex-server ClusterIP 10.43.231.214 <none> 5556/TCP,5557/TCP,5558/TCP 10d
argocd argocd-metrics ClusterIP 10.43.79.79 <none> 8082/TCP 10d
argocd argocd-notifications-controller-metrics ClusterIP 10.43.47.65 <none> 9001/TCP 10d
argocd argocd-redis ClusterIP 10.43.98.160 <none> 6379/TCP 10d
argocd argocd-repo-server ClusterIP 10.43.61.116 <none> 8081/TCP,8084/TCP 10d
argocd argocd-server-metrics ClusterIP 10.43.234.98 <none> 8083/TCP 10d
argocd argocd-server LoadBalancer 10.43.240.143 192.168.0.208 80:30936/TCP,443:32119/TCP 10d
redis-server redis-server LoadBalancer 10.43.9.40 192.168.0.204 6379:31345/TCP 36d
docker-registry registry-service LoadBalancer 10.43.185.225 192.168.0.202 5000:31156/TCP 36d
longhorn-system longhorn-backend ClusterIP 10.43.122.67 <none> 9500/TCP 37d
longhorn-system longhorn-engine-manager ClusterIP None <none> <none> 37d
longhorn-system longhorn-admission-webhook ClusterIP 10.43.99.110 <none> 9443/TCP 9d
longhorn-system longhorn-replica-manager ClusterIP None <none> <none> 37d
longhorn-system longhorn-conversion-webhook ClusterIP 10.43.55.126 <none> 9443/TCP 9d
longhorn-system csi-attacher ClusterIP 10.43.68.75 <none> 12345/TCP 9d
longhorn-system csi-provisioner ClusterIP 10.43.223.201 <none> 12345/TCP 9d
longhorn-system csi-resizer ClusterIP 10.43.96.164 <none> 12345/TCP 9d
longhorn-system csi-snapshotter ClusterIP 10.43.9.60 <none> 12345/TCP 9d
longhorn-system longhorn-frontend LoadBalancer 10.43.111.132 192.168.0.201 80:32276/TCP 37d
monitoring kube-state-metrics ClusterIP None <none> 8080/TCP,8081/TCP 34d
monitoring node-exporter ClusterIP None <none> 9100/TCP 35d
monitoring grafana LoadBalancer 10.43.9.110 192.168.0.206 3000:31800/TCP 34d
monitoring prometheus-operator ClusterIP None <none> 8080/TCP 8d
portainer portainer NodePort 10.43.152.232 <none> 9000:30777/TCP,9443:30779/TCP,30776:30776/TCP 5d18h
portainer portainer-ext LoadBalancer 10.43.79.201 192.168.0.207 9000:32244/TCP 5d18h
argo-workflows argo-workflow-argo-workflows-server LoadBalancer 10.43.255.184 192.168.0.210 2746:30288/TCP 5d17h
monitoring prometheus-operated ClusterIP None <none> 9090/TCP 5d4h
vault-system vault-internal ClusterIP None <none> 8200/TCP,8201/TCP 4d16h
vault-system vault ClusterIP 10.43.38.160 <none> 8200/TCP,8201/TCP 4d16h
vault-system vault-ui ClusterIP 10.43.130.54 <none> 8200/TCP 4d16h
vault-system vault-agent-injector-svc ClusterIP 10.43.1.84 <none> 443/TCP 4d16h
monitoring prometheus-external LoadBalancer 10.43.137.142 192.168.0.205 9090:32278/TCP 5d4h
monitoring prometheus ClusterIP 10.43.67.66 <none> 9090/TCP 5d4h
vault-system vault-ui-fix-ip LoadBalancer 10.43.36.179 192.168.0.211 8200:32672/TCP 3d16h
vault-system vault-fix-ip LoadBalancer 10.43.138.189 192.168.0.212 8200:30635/TCP,8201:32551/TCP 3d16h
kube-system sealed-secrets ClusterIP 10.43.27.41 <none> 8080/TCP 3d4h
external-secrets external-secrets-webhook ClusterIP 10.43.150.121 <none> 443/TCP 3d3h

Ugh, that's a lot already set up on my cluster, but if you followed my guide you should at least have this one:

longhorn-system longhorn-frontend LoadBalancer 10.43.111.132 192.168.0.201 80:32276/TCP 37d

Well, that's a service all right, but not the type we need. We need an internal ClusterIP service. I'm going to describe the longhorn-frontend service and take some info from it for the new ClusterIP service.

root@control01:~# kubectl describe service longhorn-frontend -n longhorn-system
Name: longhorn-frontend
Namespace: longhorn-system
Labels: app=longhorn-ui
        app.kubernetes.io/instance=longhorn-storage-provider
        app.kubernetes.io/managed-by=Helm
        app.kubernetes.io/name=longhorn
        app.kubernetes.io/version=v1.3.0
        helm.sh/chart=longhorn-1.3.0
Annotations: <none>
Selector: app=longhorn-ui
Type: LoadBalancer
IP Family Policy: SingleStack
IP Families: IPv4
IP: 10.43.111.132
IPs: 10.43.111.132
IP: 192.168.0.201
LoadBalancer Ingress: 192.168.0.201
Port: http 80/TCP
TargetPort: http/TCP
NodePort: http 32276/TCP
Endpoints: 10.42.4.73:8000
Session Affinity: None
External Traffic Policy: Cluster
Events: <none>

What can we take from this?

- Selector: app=longhorn-ui - This tells us that the service picks the pod running the UI by the label app=longhorn-ui.
- Endpoints: 10.42.4.73:8000 - The IP here is not important, but the port is. 8000 is the port exposed by the running pod, where the UI is running.
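If you don't feel like eyeballing the describe output, the two values can be pulled out with a bit of awk. This is just a sketch: `svc_facts` is a made-up helper name, and it assumes the `Selector:` and `Endpoints:` lines look like the ones shown above.

```shell
# svc_facts: reads `kubectl describe service ...` output on stdin and
# prints the selector and the pod port from the Endpoints line.
# (Hypothetical helper; assumes the line layout shown in the article.)
svc_facts() {
  awk '
    $1 == "Selector:"  { print "selector: " $2 }
    $1 == "Endpoints:" { n = split($2, parts, ":"); print "pod port: " parts[n] }
  '
}

# Example against the live cluster:
#   kubectl describe service longhorn-frontend -n longhorn-system | svc_facts
```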

Based on these two pieces of information, we can construct a new service.

longhorn-internal-svc.yaml

---
kind: Service
apiVersion: v1
metadata:
  name: longhorn-int-svc
  namespace: longhorn-system
spec:
  type: ClusterIP
  selector:
    app: longhorn-ui
  ports:
    - name: http
      port: 8000
      protocol: TCP
      targetPort: 8000

This is the minimal configuration needed for this service. After we apply it, it will create a ClusterIP service that targets pods with the app=longhorn-ui label and exposes port 8000. I have also named the port http to make it easier to refer back to later.

root@control01:~# kubectl apply -f longhorn-internal-svc.yaml
service/longhorn-int-svc created

root@control01:~# kubectl get svc -n longhorn-system | grep longhorn-int-svc
longhorn-int-svc ClusterIP 10.43.34.5 <none> 8000/TCP 80s

root@control01:~# kubectl describe svc longhorn-int-svc -n longhorn-system
Name: longhorn-int-svc
Namespace: longhorn-system
Labels: <none>
Annotations: <none>
Selector: app=longhorn-ui
Type: ClusterIP
IP Family Policy: SingleStack
IP Families: IPv4
IP: 10.43.34.5
IPs: 10.43.34.5
Port: http 8000/TCP
TargetPort: 8000/TCP
Endpoints: 10.42.4.73:8000
Session Affinity: None
Events: <none>

Now, I'm sure you are asking: Vladimir, I know you managed to use 2 in binary code, but how do I expose this via Traefik?

We need to create an Ingress object definition to tell Traefik how, what and where to expose. Let's do it now. File: longhorn-ingress-traefik.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: longhorn-ing-traefik
  namespace: longhorn-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
    - host: "longhorn.cube.local"
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: longhorn-int-svc
                port:
                  number: 8000

root@control01:~# kubectl apply -f longhorn-ingress-traefik.yaml
ingress.networking.k8s.io/longhorn-ing-traefik configured

Make sure you have something like 192.168.0.200 cube.local longhorn.cube.local in your hosts file for this to work. When you go to http://longhorn.cube.local/ you should see the UI.
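You can also check the route without touching the hosts file at all, by talking to the Traefik IP directly and sending the host name in the Host header. A small sketch follows; `route_url` and `route_status` are names I made up, and the IP is the one from my cluster, so adjust it to yours.

```shell
# route_url IP [PATH] -> prints the plain-HTTP URL for that IP and path.
route_url() {
  printf 'http://%s%s\n' "$1" "${2:-/}"
}

# route_status IP HOST [PATH] -> prints the HTTP status code Traefik returns
# when the request carries "Host: HOST". (Hypothetical helper names.)
route_status() {
  curl -s -o /dev/null -w '%{http_code}' -H "Host: $2" "$(route_url "$1" "$3")"
}

# Example (assumes Traefik listens on 192.168.0.200, as in this article):
#   route_status 192.168.0.200 longhorn.cube.local /
```

In my experience a 404 here usually means no Traefik rule matched the host or path, so the Ingress is the first thing to re-check.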

New way to work with Traefik

The above works fine if you want to expose the service on something.domain.url, but what if you want to expose it on domain.url/longhorn, for example?

I mean, you can try this:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: longhorn-ing-traefik
  namespace: longhorn-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
    - host: "cube.local" #<-- See here
      http:
        paths:
          - path: /longhorn #<-- See here
            pathType: Prefix
            backend:
              service:
                name: longhorn-int-svc
                port:
                  number: 8000

But that would only work for some simple services. With the Longhorn UI specifically, you will hit an issue where the page starts to load, but the service expects to live on the root path. You get missing CSS or scripts, because the Longhorn UI will look for them in domain.url/css... and not domain.url/longhorn/css....

There is a different way to expose service via Traefik.

Routers, Middlewares, Services

Traefik v2.x added CRDs to your Kubernetes cluster. Custom Resource Definitions (CRDs) are a way to define a new type of resource in your cluster. In this case, we are going to use a new resource type called IngressRoute.

File: longhorn-IngressRoute-traefik.yaml

---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: longhorn-ing-traefik
  namespace: longhorn-system
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`cube.local`) && PathPrefix(`/longhorn`)
      kind: Rule
      services:
        - name: longhorn-int-svc
          port: http
      middlewares:
        - name: longhorn-add-trailing-slash
        - name: longhorn-stripprefix

Although it looks similar, there is a lot to unpack.

- apiVersion - Here we specifically call the Traefik API extension of our Kubernetes cluster.
- kind - Here we define the type of resource we are going to create. In this case, we are creating an IngressRoute, which is specific to this CRD.
- metadata - The same as before: the name of the IngressRoute and its namespace.
- entryPoints - A new thing in our definition. web means port 80 in our case. This is pre-defined in Traefik, but you can create your own entry points. The other pre-defined entry point is websecure, which basically means port 443. As mentioned, you can create a YAML file with your own entry point and specify various parameters for it. Read more here: EntryPoints
- match - What we are matching with this routing rule. In this case, we are matching Host(`cube.local`) and PathPrefix(`/longhorn`), so http://cube.local/longhorn/ will be routed to the longhorn-int-svc service.
- services - This is the service we are going to expose. Same as before, except that I did not specify the port number, just its name. You can write it the same way as before, with the port number, if you like.
- middlewares - Another new thing in our definition. Middlewares are modifications. You can apply more than one, but they only take effect after the match has happened and before the request is forwarded to the service. Such a modification can, for example, remove www from the URL, add custom headers, or add a list of IPs allowed to connect, and so on. We will create our own middlewares called longhorn-stripprefix and longhorn-add-trailing-slash to deal with the CSS request issues. More info about middlewares.

Middlewares

File: longhorn-middleware1-traefik.yaml

---
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: longhorn-add-trailing-slash
  namespace: longhorn-system
spec:
  redirectRegex:
    regex: ^.*/longhorn$
    replacement: /longhorn/

We are going to use the redirectRegex type of middleware to redirect all requests for /longhorn to /longhorn/. I'm not great at regex, so I hope this is OK. The point is that Longhorn expects the URL to end with / to work properly. If we define the prefix just as /longhorn, it will not work. If we define it as /longhorn/, it will work, but any request to /longhorn would get a 404. Therefore, this first modification adds the / at the end. I kind of feel that this is fixing an issue with the application, not an actual issue with Traefik...
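Since I'm unsure about the regex, here is a quick way to poke at it locally. This is only a sketch that runs the same pattern and replacement through sed; it does not involve Traefik at all, and Traefik uses Go regular expressions, so treat it as an approximation. The function name `add_trailing_slash` is made up.

```shell
# add_trailing_slash URL -> prints what the regex/replacement pair from the
# middleware would turn URL into (unchanged if the regex does not match).
# Approximation only: sed -E stands in for Traefik's Go regexp engine.
add_trailing_slash() {
  printf '%s\n' "$1" | sed -E 's#^.*/longhorn$#/longhorn/#'
}

add_trailing_slash "http://cube.local/longhorn"    # matches, gets rewritten
add_trailing_slash "http://cube.local/longhorn/"   # no match, left alone
```

One thing this makes visible: because of the leading `.*`, any URL that merely ends in /longhorn matches, not just the top-level path.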

File: longhorn-middleware2-traefik.yaml

---
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: longhorn-stripprefix
  namespace: longhorn-system
spec:
  stripPrefix:
    prefixes:
      - /longhorn

Here we remove /longhorn from the URL that reaches the service. This way, Longhorn should think it's receiving requests for domain.url/ and not domain.url/longhorn/, which in many cases could cause issues.
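To make the effect concrete, this is a rough shell model of what stripping the prefix does to the path. It is my own approximation of the behaviour described above, not Traefik's code, and `strip_prefix` is a hypothetical name.

```shell
# strip_prefix PATH PREFIX -> prints PATH with a leading PREFIX removed,
# making sure the result still starts with "/".
# (Rough model of the stripPrefix middleware, written as an assumption.)
strip_prefix() {
  rest=${1#"$2"}
  case $rest in
    /*) ;;               # already starts with a slash
    *)  rest=/$rest ;;   # empty or slash-less remainder gets one
  esac
  printf '%s\n' "$rest"
}

strip_prefix /longhorn/css/app.css /longhorn
strip_prefix /longhorn /longhorn
```

So a request for /longhorn/css/app.css arrives at the Longhorn pod as /css/app.css, which is where the UI expects its assets.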

I'm not sure whether it's better to keep each Middleware definition in a separate file. That can make it harder to track what belongs to what, but on the other hand you can reuse a middleware definition in multiple IngressRoute definitions.

Apply all:

kubectl apply -f longhorn-IngressRoute-traefik.yaml
kubectl apply -f longhorn-middleware1-traefik.yaml
kubectl apply -f longhorn-middleware2-traefik.yaml

And now I can access the Longhorn UI on http://cube.local/longhorn or http://cube.local/longhorn/; both work fine. I honestly do not know if this is actually the correct way to do it in this case, but it works.

In any case, I will most likely replace Traefik with some proper service mesh option in the future, or as soon as possible. Expect some info about that soon.

Did you like this article? Have a drink, and maybe order one for me as well 🙂.